Going from zero to one is a massive change. It moves the needle from something that did not exist to something that does – an infinite level of growth. But once you’ve achieved this step, everything that follows is about optimizing and improving on what you already have.

There are many ways to achieve this sort of optimization, from market research and surveys to segmentation and usability testing, but my favorite by far, especially for digital products, is AB testing. Let’s quickly explore what it is and then dive deeper into some best practices for using it.

What Is AB Testing

To put it simply, AB testing is a way to compare two versions of something and determine which one performs better on a specific outcome. In this way, AB testing is one of the most powerful ways to validate how users actually respond to product changes.

Typically you are monitoring a specific metric across the two versions, such as clicks, conversion, engagement, bounce rate, and so on. By controlling the experiment across these two versions, you can get a clear-cut understanding of the impact of the specific change you made between version A and version B.

The beauty of this approach is that you are able to use real users in your testing and base your decisions on actual behavior, not just conjecture or assumption.

Common AB tests focus on copy, buttons, and layout, but a test can cover any user interaction.

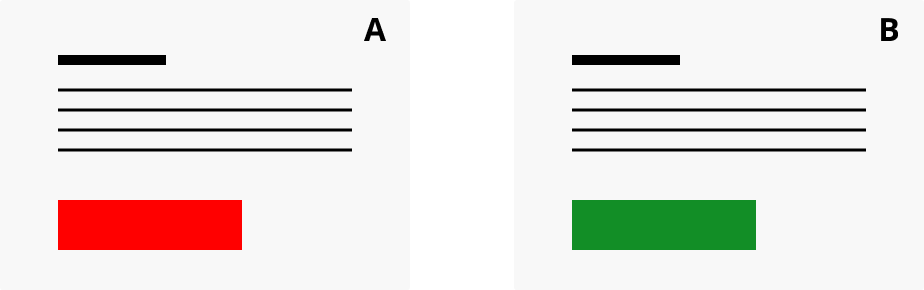

For example, if you change the BUY NOW button on your checkout from red to green, keep everything else the same, and see a statistically significant 5% increase in conversion, you can reasonably attribute that outcome to the color of the button. This controlling and measuring of changes is the key feature of AB testing.

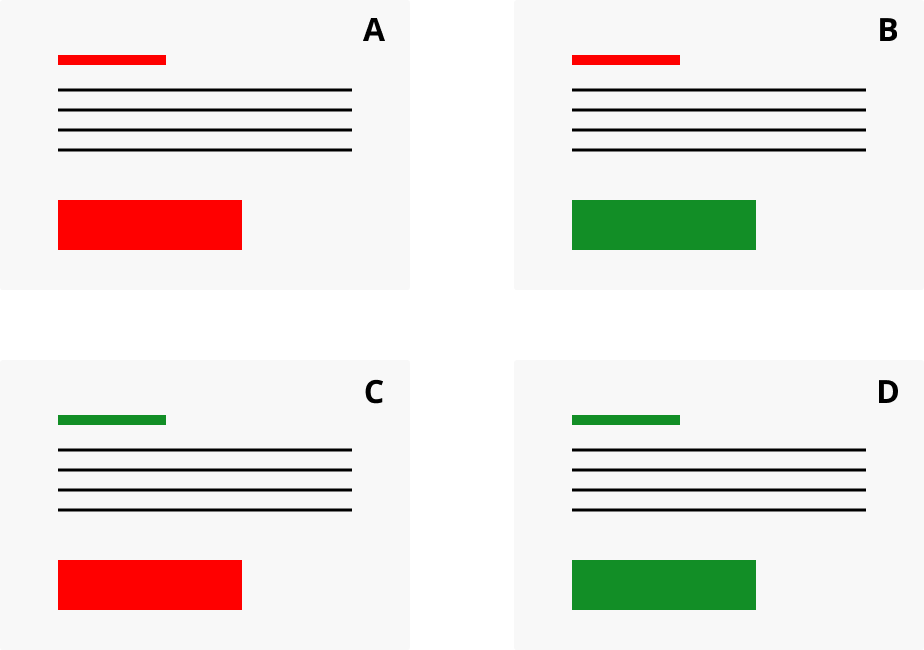

Changing a single element on a single page is the simplest form of an AB test, but things can get much more complicated. For starters, you can test multiple changes on the same page, which results in a multivariate test. In this case, you don’t only have version A and B, but also C, D, and so on. For example, if you were testing both the button and the headline, you would have 4 versions of a page.
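To see how quickly the number of versions grows, here is a minimal Python sketch that enumerates every combination of the elements under test. The element names and options are made up for illustration; they do not come from any real page.

```python
from itertools import product

# Hypothetical elements under test and their options (illustrative only).
elements = {
    "button_color": ["red", "green"],
    "headline": ["Buy now and save", "Free shipping today"],
}


def build_variants(elements):
    """Return every combination of options, one dict per page version."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variants = build_variants(elements)

# Two elements with two options each give 2 x 2 = 4 versions.
for label, variant in zip("ABCD", variants):
    print(label, variant)
```

Adding a third element with two options would double the count to 8, which is why each extra variable stretches the time needed to collect enough traffic per version.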

In this manner, AB tests can scale to multiple variables, but doing so increases not only the complexity of the test but also the time it takes to get results.

Beyond multiple variables, larger components can be tested as well, like entire pages that contain many changes or even completely different funnels.

In such tests, it’s not clear what elements may be driving an improvement of a metric, but you can still gain valuable insights from the results. This approach is especially valuable when exploring different UI/UX systems that can’t be tested in a stepwise manner.

No matter the type of AB test you implement, the results are informative whether the variation wins or loses, and they will lead you down the rabbit hole of how to improve your product.

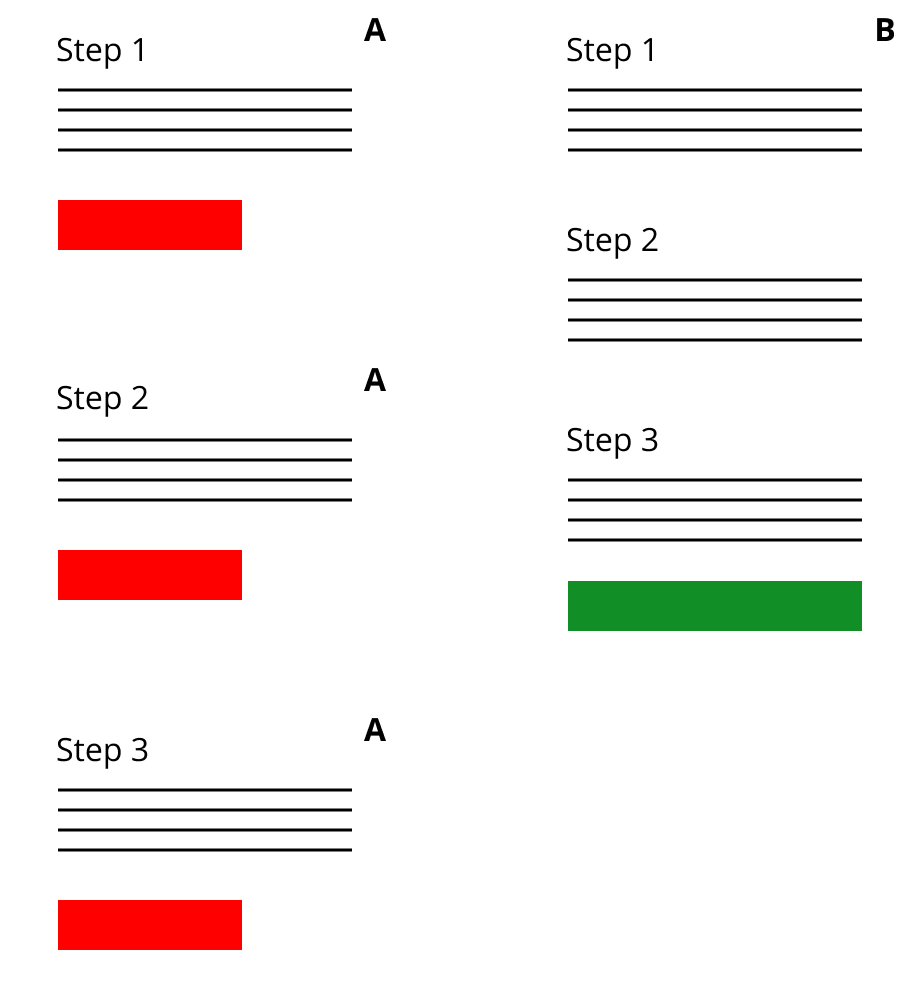

AB Test Lifecycle

While the design of AB testing is straightforward, every testing practice should follow a consistent series of steps. I approach this as 5 steps within an AB test lifecycle: ideas, hypothesis, build, run, and analyze.

It begins with ideas. Once you start this practice, you’ll rarely have a shortage of ideas to test. Even so, it’s a good practice to keep an ever-growing backlog of AB test ideas on deck.

The next step is where your hypothesis comes into play. Every test needs some sort of reason or logic on what you expect it to do. Be specific and define the metric you expect to improve. Testing without any expectation is a waste of time, as you minimize your opportunities to learn. Furthermore, a hypothesis helps you create testing priorities, as you can quantify the expected impact for each test.

Once you have a test lined up, it needs to be built. It’s important to not only build the variation in this step, but also the appropriate tracking to measure your results in the most accurate way possible. Without good data, there’s no value in running tests.

After the variation is built, run the test against your control and measure the impact. Be wary of early results as they may give you false hopes – the key is running your test until you achieve significant results with a large enough sample size to draw conclusions from.
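As a rough guide to what "large enough" means, the sketch below estimates the visitors needed per version using the standard normal-approximation formula for comparing two conversion rates. The function name, the default significance level of 0.05, and the 80% power are my assumptions for illustration; a dedicated sample size calculator may use slightly different formulas.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH version (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical numbers: 10% baseline conversion, hoping for 10.5% (a 5% relative lift).
needed = sample_size_per_variant(0.10, 0.105)
print(f"Visitors needed per version: {needed}")
```

The smaller the lift you want to detect, the more traffic you need, which is why small tweaks on low-traffic pages can take a long time to resolve.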

Finally, it’s time to analyze the results. Review your metrics and, most importantly, the key metric you were looking to impact with your test. Based on these results, you have either found a way to incrementally improve your digital product or learned what not to do. Either way, that learning can be used to launch a new test, and the cycle starts again.

The premise of AB testing offers an elegant solution for optimization. It’s low risk, easy to understand, highly adaptable, and based on real users. Furthermore, it shouldn’t be limited to your products alone. Almost anything can be AB tested, from websites and apps to ads and emails, so AB test as much as you can.

Best Practices

Doing AB testing for the sake of it is not enough. There needs to be rhyme and reason behind each test. In reality, AB testing is just as much an art as it is a science. Taking the following best practices into consideration will help you unlock greater value in your practice.

1. Design for Learning

The most important thing you can do when AB testing is to design your experiments for learning. Have a hypothesis, define a key metric, and leverage past tests to guide your future tests. All of this helps ensure that you are testing in a practical way that can lead to long-term gains.

2. Control the Variables

It is vital that you control the variables when you AB test. Not just the variations of the test, but also external factors, such as timing, traffic, user type, and length of the test.
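One way to keep the split itself under control is to assign users to versions deterministically, so the same user always sees the same version for the life of the test. The sketch below does this by hashing the user ID together with an experiment name. Both identifiers and the function name are hypothetical; testing tools typically handle assignment for you in their own way.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("A", "B")):
    """Map a user to a version; the same inputs always give the same answer."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # spreads users roughly evenly
    return variants[bucket]


# Hypothetical user and experiment names.
first_visit = assign_variant("user-123", "checkout-button-color")
second_visit = assign_variant("user-123", "checkout-button-color")
print(first_visit, second_visit)  # the two values always match
```

Including the experiment name in the hash means a user who lands in version B of one test is not automatically in version B of every other test.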

A common challenge is that major design changes disproportionately impact returning users vs. new users, as the returning users are used to seeing things in a certain way. Always keep this in mind when analyzing your results.
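A simple way to keep this in view is to break conversion down by user type as well as by version. The sketch below assumes each visit is recorded as a (version, user type, converted) tuple; that shape and the sample rows are invented for illustration.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """rows: iterable of (variant, user_type, converted) tuples."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for variant, user_type, converted in rows:
        totals[(variant, user_type)][0] += int(converted)
        totals[(variant, user_type)][1] += 1
    return {key: conv / visitors for key, (conv, visitors) in totals.items()}


# Made-up visits, not real results.
rows = [
    ("A", "new", True), ("A", "new", False),
    ("A", "returning", True), ("A", "returning", True),
    ("B", "new", True), ("B", "new", True),
    ("B", "returning", False), ("B", "returning", True),
]
rates = conversion_by_segment(rows)
for key in sorted(rates):
    print(key, rates[key])
```

Keep in mind that each segment has fewer visitors than the test as a whole, so segment-level differences need more traffic before they can be trusted.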

More importantly, having a very consistent A to B comparison to draw results from is the only way to confidently use those outcomes for decision making.

3. Account for Sample Size

It is absolutely essential that you account for sample size when running tests. Early results can lead to false positives that guide you towards poor decisions.

Don’t obsess over results before the test has run its course. Make sure you reach your sample size goal and confirm your results by calculating the statistical significance of your outcomes. A good number to strive for is a 95% confidence level or better, which corresponds to a p-value of 0.05 or lower.
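For a conversion-style metric, one common way to calculate significance is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library; the function name and the visitor counts are assumptions for illustration, and online significance calculators may use a different method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if there were no real difference
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 1,000 of 10,000 convert on A, 1,100 of 10,000 on B.
z, p_value = two_proportion_z_test(1000, 10000, 1100, 10000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
print("Significant at the 95% level" if p_value < 0.05 else "Not significant yet")
```

A p-value below 0.05 here means a difference this large would be unlikely if the two versions truly performed the same. Checking it repeatedly while the test is still running inflates the false positive rate, so decide on the sample size first.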

4. Choose a Key Metric

It can be easy to monitor too many metrics when running AB tests, but by doing so, the results of your test will be confusing at best. Instead, choose a key metric that you are trying to solve for and use that as your north star for a test.

While tracking other metrics is valuable, the focus should remain on the key metric defined. Examples of such metrics can include but aren’t limited to conversion rate, average order value, opt-in rate, engagement rate, and repeat rate.

5. Validate, Validate, Validate

While trusting your primary source for AB test results is smart, you should try to validate those results in as many ways as possible. This helps confirm that the outcomes are real and not an artifact of a single data source. Some ways of doing so include:

- Qualitative insights of the changes themselves

- Screen recordings of the test and user interaction

- Results from your tracking tool (Google Analytics, Optimizely, Mixpanel)

- Results from your own database (transactional level results)

The point is to reinforce your data and do proper analysis to confirm what the data is telling you, so you can remove any guesswork from the equation.
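As one example of this kind of cross-check, the sketch below compares conversion counts per version from a tracking tool against counts from your own database and flags any gap above a tolerance. The 2% tolerance, the function name, and the counts are all assumptions for illustration.

```python
def reconcile(tracked, database, tolerance=0.02):
    """Compare per-version conversion counts from two sources."""
    report = {}
    for variant in sorted(set(tracked) | set(database)):
        t = tracked.get(variant, 0)
        d = database.get(variant, 0)
        gap = abs(t - d) / max(t, d, 1)  # relative gap; the 1 avoids division by zero
        report[variant] = {"tracked": t, "database": d, "gap": gap, "ok": gap <= tolerance}
    return report


# Made-up counts: version B shows a large mismatch worth investigating.
report = reconcile({"A": 100, "B": 120}, {"A": 101, "B": 150})
for variant, row in report.items():
    print(variant, row)
```

A flagged gap does not say which source is wrong, only that the tracking for that version deserves a closer look before you act on the result.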

6. Iterate

Think of AB testing as an ongoing cycle. You should be constantly iterating on tests – learning and designing new experiments.

A good first step is to start with an MVP version of any AB test to see if there is value to be gained. It may not be perfect, but even a rough test can give you insight on whether to pursue an idea or not. If it has positive results, you can refine it, rather than spending too much time building a production-ready test that immediately fails.

7. Keep a Log of Tests

Finally, it’s important to keep track of results over time, to see what has worked and what has not. This not only gives you direction on tests to explore next, but it also ensures your learnings are not lost.

This history of tests may be the most valuable part of the entire process, as it gives you the most insight on your customers and how they behave.

Deciding what to test is one of the harder things to do and having this history is one way to help make those decisions.
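A log does not need to be elaborate. The sketch below shows one possible shape for a log entry, stored as one JSON line per test. The field names and the sample entry are my own assumptions, not a standard format; a spreadsheet with the same columns works just as well.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str
    key_metric: str
    control_rate: float
    variant_rate: float
    significant: bool
    notes: str = ""

    @property
    def relative_lift(self):
        """Change in the key metric relative to the control."""
        return (self.variant_rate - self.control_rate) / self.control_rate

    def to_json_line(self):
        return json.dumps(asdict(self))


# Hypothetical entry for illustration.
record = ExperimentRecord(
    name="checkout-button-color",
    hypothesis="A green BUY NOW button will raise checkout conversion",
    key_metric="conversion rate",
    control_rate=0.10,
    variant_rate=0.105,
    significant=False,
    notes="Stopped at planned sample size; rerun with bolder variation",
)
print(record.to_json_line())
print(f"Relative lift: {record.relative_lift:.1%}")
```

Recording the hypothesis next to the outcome is what makes the log useful later, since it shows not only what happened but what you expected to happen.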

Key Takeaways

AB testing is a powerful tool for any business and should be leveraged as much as possible – especially in the digital world. It’s a low-risk, high-reward method for gaining insights into your customers.

As such, you should try to create a testing culture within your organization, so that such ideas towards optimization are always top of mind.

Furthermore, take advantage of the various tools that can help you AB test in the best way possible. Whether that’s analytics tools for tracking or calculators for ensuring significance, there is a wealth of resources at your disposal. These are some of my favorites:

- Sample Size Calculator

- Statistical Significance Calculator

- Optimizely

- Google Analytics

- Predictably Irrational

- Thinking, Fast and Slow

And always remember, optimization never ends.
